Despite the immense attention and investment paid to digital transformation, most businesses still miss the most critical part of their evolution: data. It doesn’t matter if artificial intelligence (AI), process automation, bots, or predictive analytics is adopted. Without high-quality data, these core technologies can never truly benefit any company.

If your company has yet to catch on to the importance of data quality, it’s not too late. Though Big Data has been around since the early nineties, the value of and focus on data have increased drastically in the last decade. During that time, some organizations have undergone a continuous cycle of work realignment, process refinement, and business model innovation to reap the benefits of this information gold mine.

Companies that leverage their data want to create more value for their customers and themselves. But the concept of “value” is broad and depends on a defined set of strategic goals, technology adoption, and data operationalization. In most cases, businesses that cite “value” as the ultimate outcome of their transformation efforts really mean boosting efficiency, optimizing supply chain flows, or using economies of scale to improve customer relationships.

So, how can organizations use data effectively? A maxim often attributed to W. Edwards Deming and Peter Drucker offers a starting point: “You can’t manage what you don’t measure.”

Clearing the Path to Pivotal Change

To measure data use and find areas for improvement, many companies have created a new role within their C-level ranks over the last eight years: the chief data officer (CDO). This executive is tasked with defining the company-wide data strategy, including controlling, governing, and managing data-driven engagements to shape the business into an intelligent enterprise. One operational example is the cleanup of inaccurate, incomplete, and duplicated information – also known as “dirty data” – residing within the digital infrastructure.

Gartner describes “dirty data” as information that is inaccurate, incomplete, and riddled with duplicates, impacting customer turnover, expense management, sales opportunities, and back-office functions. Companies should therefore address this issue with the following practices, which draw on a subset of Gartner’s basic principles of data quality management:

- Consistency: Data is stored in one or multiple locations with equal values.

- Accuracy: Data value is consistent across the target model.

- Validity: Data values are within a certain predefined range or domain.

- Integrity: Relationships between data values are complete.

- Relevance: Data holds the right information to support the business.

Improving data quality to the point where any digital transformation gains a beneficial edge is like losing weight – it takes special effort to attain it and consistency to maintain it. With a focused mindset and healthy habits, companies can leverage their data to stay relevant and financially stable with room for future growth and new business models.

Managing Data with a Principled Approach

While approaches to assessing data quality are numerous, some methods work better than others. For example, many companies use the Friday afternoon measurement method, known as FAM. Using FAM, one assesses the most common errors within the last 100 data records, such as sales orders or business partner information, on a paper-sheet table to derive an improvement strategy for corrupted data sets.

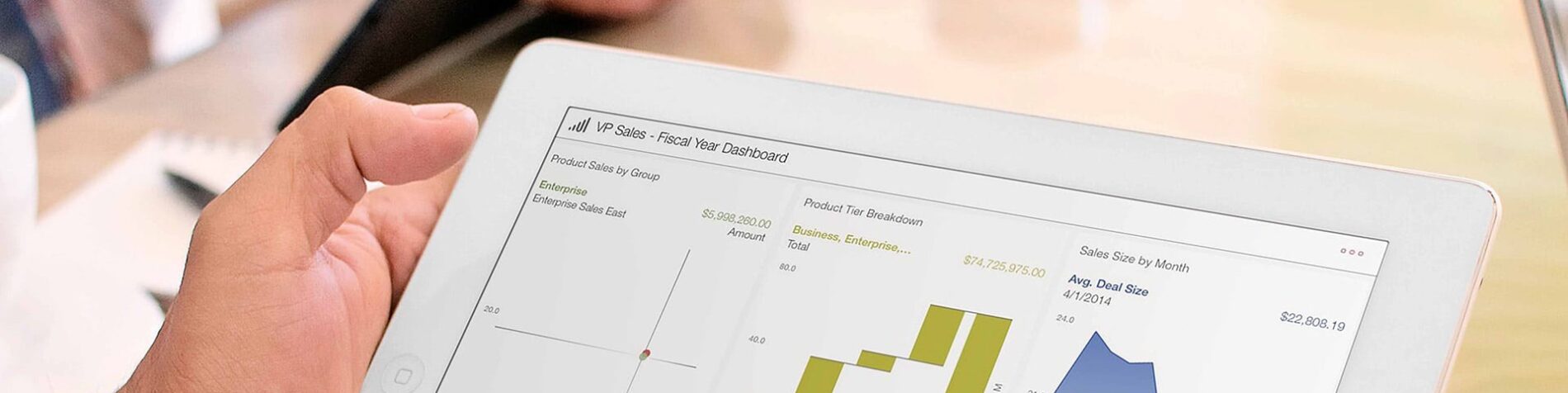
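The FAM tally described above can be sketched in a few lines of code. This is only an illustration under stated assumptions: the record layout, the `REQUIRED_FIELDS` tuple, and the `fam_tally` helper are hypothetical, and "error" is narrowed here to a missing required field, which is just one of the error types a real assessment would mark.

```python
from collections import Counter

# Hypothetical required fields for a sales-order record (assumption).
REQUIRED_FIELDS = ("customer", "city", "order_date")


def fam_tally(records, sample_size=100):
    """Tally missing required fields over the most recent records."""
    sample = records[-sample_size:]
    errors_per_field = Counter()
    flawed_records = 0
    for record in sample:
        missing = [f for f in REQUIRED_FIELDS if not record.get(f)]
        errors_per_field.update(missing)
        if missing:
            flawed_records += 1
    # Share of sampled records without any marked error.
    clean_share = (len(sample) - flawed_records) / len(sample) if sample else 0.0
    return errors_per_field, clean_share


# Made-up sample data for illustration only.
records = [
    {"customer": "ACME", "city": "Berlin", "order_date": "2020-01-15"},
    {"customer": "", "city": "Paris", "order_date": "2020-01-16"},
    {"customer": "Globex", "city": "", "order_date": ""},
    {"customer": "Initech", "city": "Austin", "order_date": "2020-01-18"},
]
errors, clean_share = fam_tally(records)
print(errors.most_common())
print(f"{clean_share:.0%} of sampled records are error-free")
```

The per-field counts point to the most common error source, while the error-free share gives a single number to track from one assessment to the next.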

FAM may not be the best solution for most organizations. We recommend an online tool, namely a data quality dashboard. This tool provides real-time insights into a company’s overall data health and uncovers inefficiencies across different domains, so the CDO organization can address them in one location, millions of records at a time. A rules framework combined with innovative technologies, such as machine learning and intelligent robotic process automation (iRPA), turns real-time insight into action across all systems in which master data is stored, resolving inconsistencies.

1. Data consistency is evaluated in terms of quantity, not quality.

To enhance key performance indicators for data quality, organizations should consider a specific master data domain, such as material master data, against its availability in cloud-based or on-premise systems. This could be helpful if master data is scattered across different landscapes.

Master data records must also be checked for duplicate values based on the variety of their attributes. Duplicates, followed by missing entries, are the most common occurrence within corrupted data. To support the duplicate search, further aspects, like data accuracy, need to be taken into consideration.
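A duplicate check based on a set of attributes could look roughly like the sketch below. The attribute names in `MATCH_ATTRIBUTES`, the record layout, and both helper functions are assumptions made for illustration; a production rule would likely add fuzzy matching on top of this exact-key comparison.

```python
from collections import defaultdict

# Hypothetical attributes that together identify a material (assumption).
MATCH_ATTRIBUTES = ("description", "unit", "plant")


def match_key(record):
    """Build a comparison key from trimmed, case-folded attribute values."""
    return tuple(str(record.get(a, "")).strip().casefold() for a in MATCH_ATTRIBUTES)


def find_duplicates(records):
    """Return groups of record IDs that share the same match key."""
    groups = defaultdict(list)
    for record in records:
        groups[match_key(record)].append(record["id"])
    return [ids for ids in groups.values() if len(ids) > 1]


# Made-up material master records for illustration only.
materials = [
    {"id": "M-001", "description": "Steel bolt M8", "unit": "PC", "plant": "1000"},
    {"id": "M-002", "description": "steel bolt m8 ", "unit": "pc", "plant": "1000"},
    {"id": "M-003", "description": "Steel bolt M10", "unit": "PC", "plant": "1000"},
]
print(find_duplicates(materials))  # [['M-001', 'M-002']]
```

Normalizing case and whitespace before comparing is what lets the first two records match even though they were typed differently.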

2. Data accuracy is measured by the format and content of defined data sets.

The use of country-specific data formats – such as the structural difference between European and American dates – can significantly impact a company’s ability to deliver tangible outcomes. Decision-makers can never assume that data means the same to everyone.
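To make the date example concrete, the short sketch below parses the same string under a day-first and a month-first convention. The sample value is made up; the format codes are those of Python's standard `datetime.strptime`.

```python
from datetime import datetime

raw = "03/04/2021"  # made-up value as it might appear in a source system

european = datetime.strptime(raw, "%d/%m/%Y").date()  # day first
american = datetime.strptime(raw, "%m/%d/%Y").date()  # month first

print(european.isoformat())  # 2021-04-03
print(american.isoformat())  # 2021-03-04
```

The same characters yield two dates a month apart, which is why a format rule has to know the convention of each source system.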

A popular use case for verifying content for accuracy is the handling of workflows and abbreviations. Following logic like “shipment date must not come before the order date” can help ensure the dates on specific activities communicate the same insight to everyone involved in the delivery process.
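The rule quoted above translates almost directly into code. In this sketch the field names `order_date` and `shipment_date`, the order IDs, and the function name are hypothetical.

```python
from datetime import date


def shipment_before_order(orders):
    """Return IDs of orders whose shipment date precedes the order date."""
    return [
        order["id"]
        for order in orders
        if order["shipment_date"] < order["order_date"]
    ]


# Made-up orders for illustration only.
orders = [
    {"id": "SO-1", "order_date": date(2021, 3, 1), "shipment_date": date(2021, 3, 5)},
    {"id": "SO-2", "order_date": date(2021, 3, 10), "shipment_date": date(2021, 3, 8)},
]
print(shipment_before_order(orders))  # ['SO-2']
```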

Further content-related accuracy checks are necessary for homogeneous data sets. Abbreviations should be set to a company-wide standard. For instance, in the “City” field, “New York” must appear as “NYC,” not “NY.” Once applied, such checks are also useful in the duplicate search.
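One simple way to enforce such a standard is a lookup table of known spellings. The mapping below is a hypothetical example built around the “NYC” case from the text, not an actual company standard.

```python
# Hypothetical company-wide standard for the "City" field (assumption).
CITY_STANDARD = {
    "ny": "NYC",
    "nyc": "NYC",
    "new york": "NYC",
    "new york city": "NYC",
}


def normalize_city(value):
    """Map known spellings to the standard; return unknown values trimmed."""
    cleaned = value.strip()
    return CITY_STANDARD.get(cleaned.casefold(), cleaned)


for raw in ("NY", " New York ", "Boston"):
    print(repr(raw), "->", normalize_city(raw))
```

Values without an entry in the table pass through unchanged apart from trimming, so the rule never silently rewrites a city it does not recognize.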

3. Data validity checks are a recurring task.

As a byproduct of manual processes or reorganizations, companies often have addresses that are only up to date for a specific time frame. A dedicated business rule can check these time frames against a specified date, mark affected records, and alert the person responsible for data quality so that corrective actions can be derived. Such checks need to run on a recurring basis.
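A time-frame rule of this kind might be sketched as follows. The `valid_from` and `valid_to` fields, the address IDs, and the function name are assumptions; how flagged records reach the responsible person is left out.

```python
from datetime import date


def outside_validity(records, as_of):
    """Return IDs of records whose validity window does not include as_of."""
    return [
        record["id"]
        for record in records
        if not (record["valid_from"] <= as_of <= record["valid_to"])
    ]


# Made-up address records for illustration only.
addresses = [
    {"id": "A-1", "valid_from": date(2019, 1, 1), "valid_to": date(2020, 12, 31)},
    {"id": "A-2", "valid_from": date(2020, 1, 1), "valid_to": date(9999, 12, 31)},
]
flagged = outside_validity(addresses, as_of=date(2021, 6, 1))
print(flagged)  # ['A-1']
```

Passing the reference date as a parameter makes it easy to rerun the same rule on a schedule with the current date.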

4. Data integrity is assessed to identify data sets with a recurring pattern.

Key performance indicators for meaningful data quality should consider data integrity patterns from all master data domains. For example, detecting a recurring data pattern could be as simple as configuring a required field as mandatory to capture necessary insights. A more complex rule might assign a material code to a specific characteristic category or plant location. Patterns within the integrity key performance indicator (KPI) must carry significant weight within the overall data quality KPI.
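As a rough illustration, the sketch below scores a mandatory-field rule and folds it into an overall KPI as a weighted average. The weights, the dimension scores other than integrity, and both function names are hypothetical; the only point taken from the text is that integrity receives the largest weight.

```python
# Hypothetical weights per dimension; integrity is weighted highest (assumption).
WEIGHTS = {
    "consistency": 0.15,
    "accuracy": 0.20,
    "validity": 0.15,
    "integrity": 0.35,
    "relevance": 0.15,
}


def mandatory_field_score(records, field):
    """Share of records in which the mandatory field is filled."""
    if not records:
        return 0.0
    filled = sum(1 for record in records if record.get(field))
    return filled / len(records)


def overall_kpi(scores, weights=WEIGHTS):
    """Weighted average of per-dimension scores, each between 0 and 1."""
    total_weight = sum(weights.values())
    return sum(scores[name] * w for name, w in weights.items()) / total_weight


# Made-up material records; one is missing its plant assignment.
materials = [
    {"id": "M-001", "plant": "1000"},
    {"id": "M-002", "plant": "1000"},
    {"id": "M-003", "plant": ""},
    {"id": "M-004", "plant": "2000"},
]
scores = {
    "consistency": 0.9,  # illustrative placeholder values
    "accuracy": 0.8,
    "validity": 1.0,
    "relevance": 0.9,
    "integrity": mandatory_field_score(materials, "plant"),
}
kpi = overall_kpi(scores)
print(f"Overall data quality KPI: {kpi:.2%}")
```

Because integrity carries the largest weight, a single unfilled mandatory field moves the overall figure more than a comparable drop in any other dimension.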

5. Data relevance is achieved through continuous maintenance.

After setting up rules for deriving meaningful data quality, the dashboard setup still requires attention to help ensure users can drill down to the line item level for dynamic, real-time, and actionable insight. Directing analytics results generated by the dashboard to the maintenance layer allows CDOs to easily improve data quality by opening service requests. Furthermore, user feedback from service requests can serve as input to improve rule composition. This feedback should be the starting point of a cycle to resolve and refine the rules and data issues.

Focusing on Data Improvement and Consistency at Scale

Every company needs a single source of truth to pinpoint its weaknesses and inefficiencies. Fortunately, innovations in mobility, artificial intelligence, process automation, bots, and predictive analytics are making data creation and editing more efficient and convenient than ever before.

But to be truly successful, businesses must take a quantum leap in data quality with the assistance of tools such as a CDO dashboard. Why? Because data quality is the heart of every digital transformation and a must-have for every company pursuing an increasingly digital marketplace.

Explore how SAP Advisory Services can help energize your business’s digital transformation with a flexible and scalable rule framework for data quality management.

Stay in the conversation by following SAP Services and Support on YouTube, Twitter, LinkedIn, and Facebook.

Pascal Angerhausen is business transformation lead for CIO Advisory at SAP.

Tobias Fischer is data architect for CIO Advisory at SAP.